A nice feature of LyX is that you can edit \included (or \inputed) files from the Insert→File→Child Document dialog window. Recently, however, I ran into a problem where I could edit file types that had Notepad as the default handler but not those that had Notepad++ as the default handler. I would get a dialog message saying "Error: Cannot edit file \n Auto-edit file file_path.tex failed". If this happens to you, here's the reason and the fix.

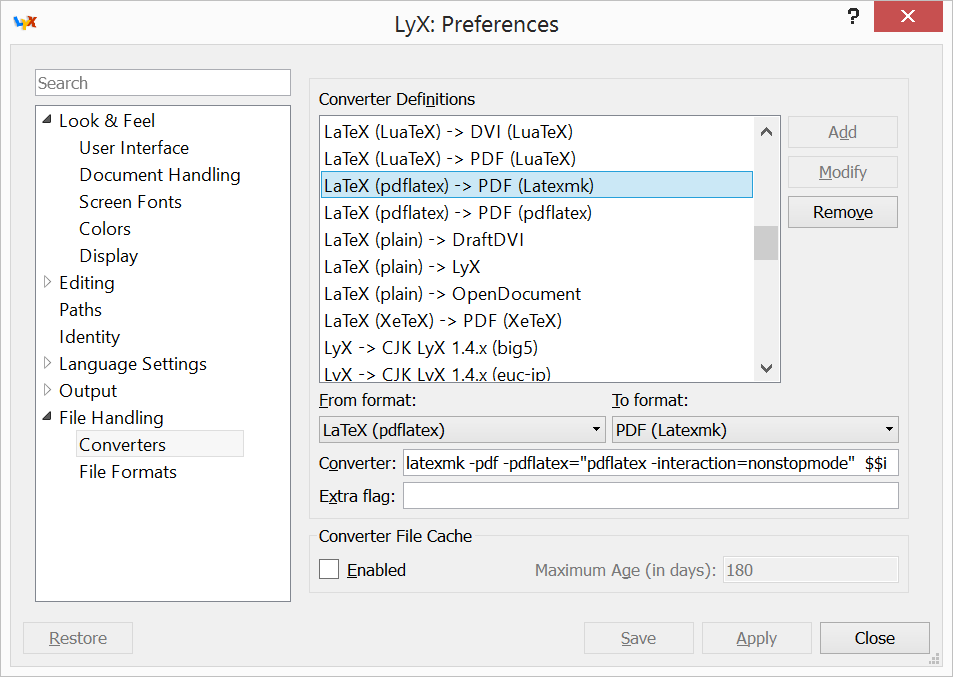

Unless the child document is a .lyx file, LyX checks which handler is associated with text files in Tools→Preferences→File Handling→File Formats, "Plain Text". If this is set to "Custom:auto", LyX calls Win32's ShellExecute() with the action/verb set to edit. The problem with my setup is that merely setting Notepad++ as the default handler for a file type (by right-clicking a file of that type and selecting Open with→Choose Another App→Use this App to open all XXX files) does not register the application as the handler for the edit verb.

Possible solutions:

- Set Notepad++ to be the editor for all included files. Do this by setting the edit command for Plain Text files to "C:\Program Files (x86)\Notepad++\notepad++.exe". This is what I did.

- LyX could be patched so that if the first call to ShellExecute() in osWin32.cpp:autoOpenFile() with the edit verb fails with error code SE_ERR_NOASSOC (=31), it tries again with a verb of NULL.

- The user can register an edit verb with the default file handler in the registry. See here and here.
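
To make the second option (retrying with a NULL verb) more concrete, here is a minimal sketch of the fallback logic. It is not LyX's actual code: `ShellExec`, `kSeErrNoAssoc`, and `autoEditWithFallback` are hypothetical names I made up for illustration, and the call to ShellExecute() is passed in as a function so the logic can be read (and tried) without the Windows headers. The only Win32 facts it relies on are that SE_ERR_NOASSOC is 31 and that ShellExecute() signals success with a return value greater than 32.

```cpp
#include <cstdint>
#include <functional>
#include <string>

// Mirrors the Win32 value of SE_ERR_NOASSOC (no application associated
// with the requested verb for this file type).
constexpr std::intptr_t kSeErrNoAssoc = 31;

// Hypothetical stand-in for ShellExecute(): takes a verb (nullptr means
// "use the default verb") and a file path, and returns the
// ShellExecute-style result code.
using ShellExec =
    std::function<std::intptr_t(const char* verb, const std::string& path)>;

// Try the "edit" verb first. If no handler is registered for it, retry
// with a NULL verb so the shell falls back to the default handler.
// Returns true if either attempt succeeded (result code > 32).
bool autoEditWithFallback(const ShellExec& exec, const std::string& path) {
    std::intptr_t rc = exec("edit", path);
    if (rc == kSeErrNoAssoc) {
        rc = exec(nullptr, path);
    }
    return rc > 32;
}
```

On Windows, the `exec` argument would presumably wrap something like `ShellExecuteA(nullptr, verb, path.c_str(), nullptr, nullptr, SW_SHOWNORMAL)` with the returned HINSTANCE cast to an integer. Any other error (file not found, access denied, and so on) is deliberately not retried, since a different verb would not help there.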